The terms Red Team and Blue Team have long been associated with the military. They are commonly used to describe teams that use their skills to imitate the attack techniques that “enemies” might use, and other teams that use their skills to defend.

Blue Team members are, by definition, the internal cybersecurity staff, whereas the Red Team is the external entity with the intent to break into the system. The Red Team is hired to test the effectiveness of the Blue Team by emulating the behaviors of a real black-hat hacking group, making the attack as realistic and as chaotic as possible to challenge both teams equally.

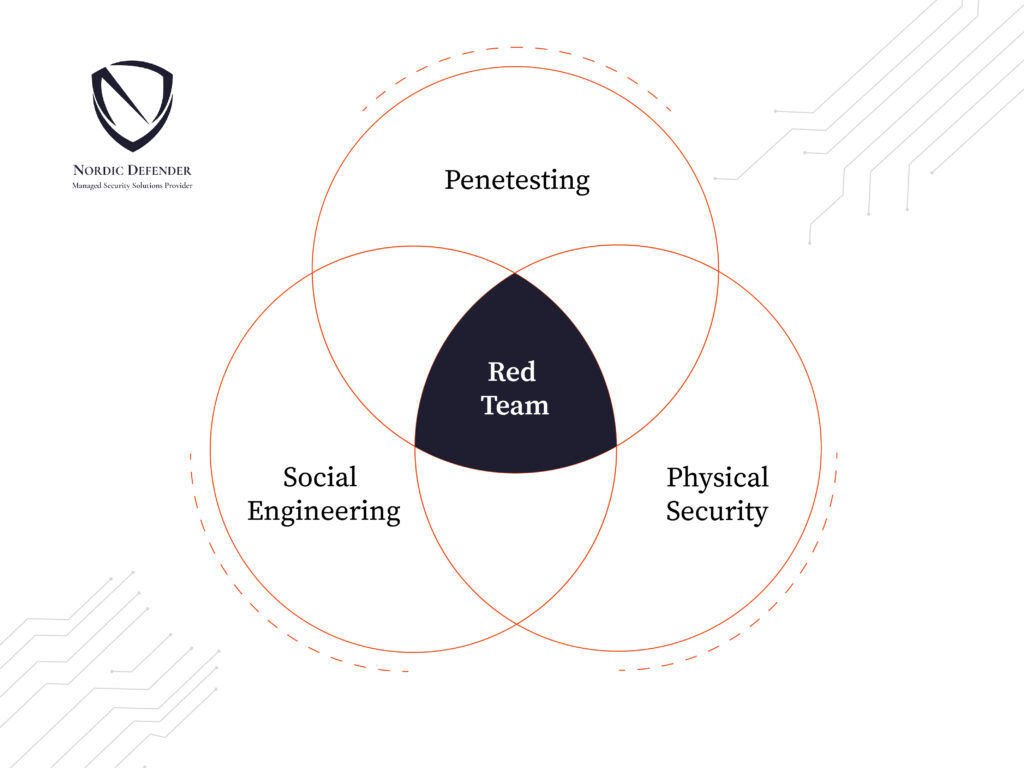

The Red Team may try to intrude into the network, systems, and other digital assets in various ways, such as phishing, vishing, vulnerability identification, firewall intrusion, and so on. The Blue Team, on the other hand, tries to stop these simulated attacks. By doing so, the defensive team learns to react and defend in varied situations.

Red Teaming At a High Level

Before I dig deep into the cyber aspects of Red Teaming, let’s explore Red Teaming at a high level. Red Teaming is the practice of attacking problems from an adversarial point of view. It is a mindset that is used to challenge an idea to help prove its worth, find weaknesses, or identify areas to improve.

When people develop complex systems, the design, ideas, and implementation typically come from skilled professionals. These individuals are well respected and trusted in their field and highly capable of designing and developing functional systems.

Although these systems are highly functional and capable, the ideas, concepts, and thoughts behind them can sometimes be ‘boxed in’, leading to incorrect assumptions about how a system truly operates. Systems are built by people, and people make assumptions about capability, functionality, and security. These assumptions lead to flaws that a threat may take advantage of.

What Types Of Organizations Are Good Candidates?

Red Teaming has several goals and can offer tremendous value if applied properly.

As a general rule, a Red Team examining and measuring an organization’s security works best against a mature organization. These are organizations that have good security hygiene and conduct vulnerability assessments and penetration tests on a regular basis, which makes them good candidates for a Red Team engagement.

Engagements are designed to examine an operation’s security efficiency and help validate the effectiveness of security processes and controls. Less mature organizations, which do not yet practice good security hygiene, are not qualified candidates for a full Red Team engagement. Nonetheless, they could benefit from a Red Team’s assistance in moving toward readiness.

Aside from measuring security, a Red Team performs various other operations and is effective in numerous ways. A Red Team engagement can support the Blue Team’s training and improve its efficiency. Red Teams challenge and test common wisdom and thought by emulating custom scenarios of specific attacks.

A Successful Red Team Operator

A crucial trait of a successful Red Team operator is an adversarial mindset. An operator must think critically and outside the box, and must have the skills needed to act on their ideas and bring value to the engagement. Acting and thinking as an enemy is fundamental for a Red Teamer, so that they can design and execute attacks against a system as a real threat would.

To improve your security and your system, you must think like an enemy and use their tactics to test conventional thinking, trying the ideas a malicious hacker might have, no matter how silly they seem.

A Red Team operator only acts like a “bad guy” and is not really one. It is crucial for a Red Teamer to think and act like an enemy without being reckless during an engagement. Red Team operators are not the same as security testers. Red Teamers must maintain control of their actions and understand the potential risks associated with each.

Red Team goals are focused on operations. The goals are based on the story of the engagement, with the sole purpose of measuring the overall security stance of an organization’s defenses. For example, when emulating a threat, the Red Team will use the TTPs (tactics, techniques, and procedures) that represent that threat. Other measurements, like “How many systems are currently patched”, are not related to this context and need to be addressed by other kinds of assessment.

Minimize Artificial Restriction

Depending on the security maturity of a target, Red Team engagements can sometimes be stressful and difficult. The Blue Team may have a desire to win and defeat the Red Team. That may seem to be a good goal, but if the odds are stacked against Red and they are unable to execute as a real adversary would, what is gained by Blue?

An organization has to minimize artificial constraints so the Red Team can operate without restrictions. Pressure should be applied to the Blue Team, without destroying everything, so that the impacts of a real threat can be efficiently exposed and evaluated.

Both teams need to focus on their operational goals. The Red Team needs to be in control of its actions and understand the risks associated with them. When a Red Team is about to perform a dangerous action, they should discuss the risks with the control group, referred to as the White Cell, to determine whether to push forward or whether it is too risky to continue. If the risk is too great, the result may need to be achieved through white carding, where the outcome is simulated rather than actually executed.
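The escalation step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the names `PlannedAction`, `Decision`, and `review_action`, the 1 to 5 risk scale, and the threshold are all hypothetical and not part of any standard Red Team tooling. In practice the decision comes out of a discussion with the White Cell, not a numeric cutoff.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    EXECUTE = "execute"        # the Red Team performs the action for real
    WHITE_CARD = "white_card"  # the outcome is simulated instead of executed


@dataclass
class PlannedAction:
    name: str
    risk: int  # hypothetical scale: 1 (harmless) to 5 (likely to cause damage)


def review_action(action: PlannedAction, risk_threshold: int = 4) -> Decision:
    """Stand-in for the White Cell discussion about a planned action.

    Assumption: a simple numeric threshold replaces the human judgment
    that a real control group would apply.
    """
    if action.risk >= risk_threshold:
        return Decision.WHITE_CARD
    return Decision.EXECUTE


# Hypothetical engagement plan, for illustration only.
plan = [
    PlannedAction("send phishing email", risk=2),
    PlannedAction("exploit production database server", risk=5),
]

decisions = {action.name: review_action(action) for action in plan}
for name, decision in decisions.items():
    print(f"{name}: {decision.value}")
```

The point of the sketch is that a risky action is not simply dropped. It is either executed under control or its result is white carded so the engagement story can continue.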

If the Red Team is limited in tools and techniques, they won’t be able to operate or successfully emulate an adversary. Because some operations may run 24 hours a day, do not limit assessment hours; instead, allow the Red Team to adjust their schedule as needed so they can operate during the hours required to achieve their goals.

While we should not limit the Red Team, it is also essential to know that they do not have unhindered freedom. Our goal is to create adversarial emulation while minimizing risks. Because it is not a true adversary, a Red Team is bound to limit negative actions by identifying risky areas and documenting potential results.